Between

The Between rule is used to ensure that numeric values stay within an expected and acceptable range.

It is commonly used to catch outliers, invalid measurements, and data entry or pipeline errors before they impact reports or downstream systems.

What is Between?

Think of Between as a “safety fence” for numeric values in your data.

Just like speed limits on a road prevent unsafe driving, the Between check ensures that numbers stay within boundaries that make sense for your business.

If a value goes below the minimum or above the maximum, it gets flagged immediately.

In simple terms: This number must fall within this range.

Add Between Check

Use the Between check when you want to validate that a numeric field stays within an expected minimum and maximum value.

This helps you detect:

- Unexpected spikes or drops

- Data entry mistakes

- Sensor or system malfunctions

- Broken transformations or unit conversion issues

What Does Between Do?

Between helps you answer questions like:

- “Is this quantity unreasonably low or high?”

- “Did a system send an invalid numeric value?”

- “Are measurements staying within realistic limits?”

- “Did a unit conversion break and inflate values?”

In short: It ensures your numbers stay realistic and trustworthy.

How Does Between Work?

Let’s break it down step by step.

Step 1: Choose a Numeric Field

You select a single numeric field, such as:

- Quantity

- Price

- Temperature

- Duration

- Weight

- Usage count

Step 2: Define the Acceptable Range

You specify:

- Minimum value

- Maximum value

- Whether each boundary is inclusive or exclusive

Example:

- Min = 5 (inclusive)

- Max = 20 (inclusive)

This means:

👉 5 ≤ value ≤ 20

Step 3: Validation Happens Automatically

For each record, the Between check evaluates:

- Is the value less than the minimum?

- Is the value greater than the maximum?

If yes → Anomaly detected

Step 4: Review the Results

The output shows:

- Pass – Value is within the allowed range

- Anomaly Found – Value falls outside the range

Why Should You Use Between?

1. Catch Invalid Values Early

A quantity of -3, 0, or 10,000 may technically be a number—but it may not make sense for your business.

Between catches these issues before they reach dashboards or customers.

2. Prevent Bad Decisions

Out-of-range values can:

- Skew averages

- Break charts

- Trigger false alerts

- Lead to wrong operational decisions

Between protects your analytics from bad inputs.

3. Save Manual Validation Time

Instead of scanning reports for suspicious numbers, Between continuously validates every new record automatically.

4. Increase Confidence in Data Quality

When stakeholders see numbers within expected bounds, trust in your data increases.

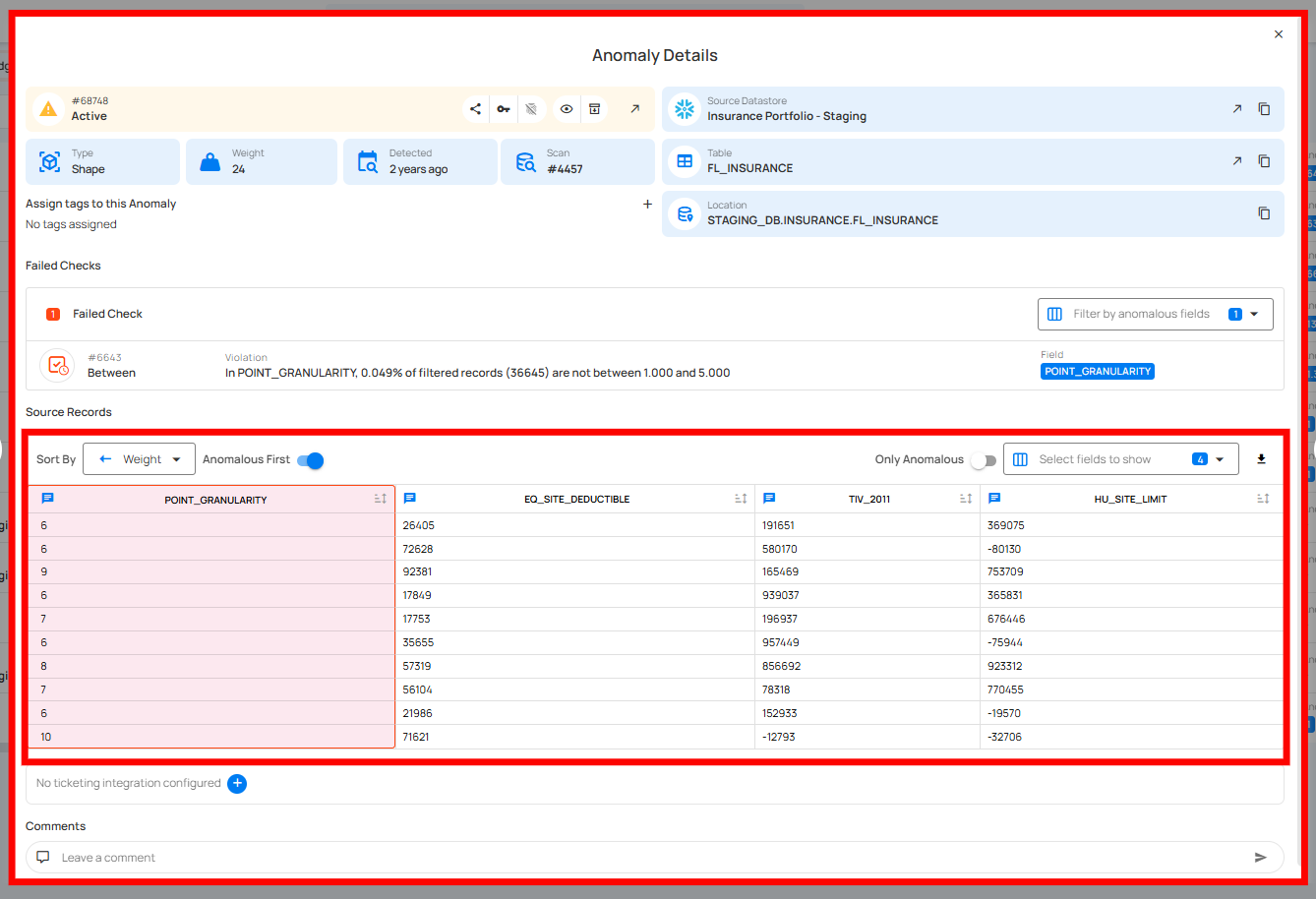

Real-Life Example: Insurance Location Risk Validation (Between Check)

The Situation

An insurance company maintains a property insurance portfolio in the FL_INSURANCE table.

This data is used by actuarial and catastrophe-risk models to calculate premiums and exposure.

One critical numeric field is POINT_GRANULARITY, which defines the resolution used for location-based risk calculations.

Based on business and modeling requirements:

- Minimum allowed value:

1.000 - Maximum allowed value:

5.000

Any value outside this range makes the risk calculation unreliable.

The Problem

During a routine data quality scan on the Insurance Portfolio – Staging datastore, analysts noticed unexpected behavior in downstream risk models.

There were:

- No missing records

- No schema mismatches

- No pipeline failures

However, some policies showed unexpected risk scores, even though the data appeared complete.

The Solution: Between Check

To validate numeric correctness, the data team configured a Between check on the POINT_GRANULARITY field with the following rules:

- Min:

1.000(inclusive) - Max:

5.000(inclusive)

This ensured that every insurance record used a valid granularity level for risk computation.

What the Between Check Discovered

OUT-OF-RANGE VALUES DETECTED

- Field:

POINT_GRANULARITY - Rule Applied: Between

- Violation: 0.049% of filtered records (36,645 total)

- Allowed Range: 1.000 to 5.000

Source Records (Anomalous Values)

| POINT_GRANULARITY | EQ_SITE_DEDUCTIBLE | TIV_2011 | HU_SITE_LIMIT |

|---|---|---|---|

| 6 | 26405 | 191651 | 369075 |

| 6 | 72628 | 580170 | -80130 |

| 9 | 92381 | 165469 | 753709 |

| 6 | 17849 | 939037 | 365831 |

| 7 | 17753 | 196937 | 676446 |

| 6 | 35655 | 957449 | -75944 |

| 8 | 57319 | 856692 | 923312 |

| 7 | 56104 | 78318 | 770455 |

| 6 | 21986 | 152933 | -19570 |

| 10 | 71621 | -12793 | -32706 |

These records were structurally valid but numerically invalid according to business rules.

Anomaly Interpretation

- The records existed in the table and passed schema validation

- Only the numeric constraint was violated

- Because a range rule failed, the anomaly was classified as a Shape anomaly

- This indicated a data quality issue, not missing or duplicate data

Root Cause Identified

Further investigation showed:

- An upstream ingestion change defaulted

POINT_GRANULARITYto higher values - No validation existed at the source system

- The issue silently propagated into staging analytics

The Outcome

Immediate Results - Invalid values were corrected - Risk models were recalculated using valid granularity levels

Long-Term Protection - The Between check now runs automatically on each scan - Any future out-of-range values are flagged immediately - Incorrect risk calculations are prevented before reporting or pricing

🔍 Key Takeaway

Between checks protect numeric fields that must stay within strict business limits.

In this case, the Between check caught values that were technically valid numbers—but business-invalid, preventing incorrect insurance risk assessments and downstream decisions.

Field Scope

Single: The rule evaluates a single specified field.

Accepted Types

| Type | |

|---|---|

Integral |

|

Fractional |

General Properties

| Name | Supported |

|---|---|

Filter Allows the targeting of specific data based on conditions |

|

Coverage Customization Allows adjusting the percentage of records that must meet the rule's conditions |

The filter allows you to define a subset of data upon which the rule will operate.

It requires a valid Spark SQL expression that determines the criteria rows in the DataFrame should meet. This means the expression specifies which rows the DataFrame should include based on those criteria. Since it's applied directly to the Spark DataFrame, traditional SQL constructs like WHERE clauses are not supported.

Examples

Direct Conditions

Simply specify the condition you want to be met.

Combining Conditions

Combine multiple conditions using logical operators like AND and OR.

Correct usage" collapsible="true

Incorrect usage" collapsible="true

Utilizing Functions

Leverage Spark SQL functions to refine and enhance your conditions.

Correct usage" collapsible="true

Incorrect usage" collapsible="true

Using scan-time variables

To refer to the current dataframe being analyzed, use the reserved dynamic variable {{ _qualytics_self }}.

Correct usage" collapsible="true

Incorrect usage" collapsible="true

While subqueries can be useful, their application within filters in our context has limitations. For example, directly referencing other containers or the broader target container in such subqueries is not supported. Attempting to do so will result in an error.

Important Note on {{ _qualytics_self }}

The {{ _qualytics_self }} keyword refers to the dataframe that's currently under examination. In the context of a full scan, this variable represents the entire target container. However, during incremental scans, it only reflects a subset of the target container, capturing just the incremental data. It's crucial to recognize that in such scenarios, using {{ _qualytics_self }} may not encompass all entries from the target container.

Specific Properties

Specify both minimum and maximum boundaries, and determine if these boundaries should be inclusive.

| Name | Explanation |

|---|---|

Max |

The upper boundary of the range. |

Inclusive (Max) |

If true, the upper boundary is considered a valid value within the range. Otherwise, it's exclusive. |

Min |

The lower boundary of the range. |

Inclusive (Min) |

If true, the lower boundary is considered a valid value within the range. Otherwise, it's exclusive. |

Anomaly Types

| Type | Supported |

|---|---|

Record Flag inconsistencies at the row level |

|

Shape Flag inconsistencies in the overall patterns and distributions of a field |

Example

Objective: Ensure that all L_QUANTITY entries in the LINEITEM table are between 5 and 20 (inclusive).

Sample Data

| L_ORDERKEY | L_QUANTITY |

|---|---|

| 1 | 4 |

| 2 | 15 |

| 3 | 21 |

{

"description": "Ensure that all L_QUANTITY entries in the LINEITEM table are between 5 and 20 (inclusive)",

"coverage": 1,

"properties": {

"min":5,

"inclusive_min":true,

"max":20,

"inclusive_max":true

},

"tags": [],

"fields": ["L_QUANTITY"],

"additional_metadata": {"key 1": "value 1", "key 2": "value 2"},

"rule": "between",

"container_id": {container_id},

"template_id": {template_id},

"filter": "1=1"

}

Anomaly Explanation

In the sample data above, the entries with L_ORDERKEY 1 and 3 do not satisfy the rule because their L_QUANTITY values are not between 5 and 20 inclusive.

graph TD

A[Start] --> B[Retrieve L_QUANTITY]

B --> C{Is 5 <= L_QUANTITY <= 20?}

C -->|Yes| D[Move to Next Record/End]

C -->|No| E[Mark as Anomalous]

E --> DPotential Violation Messages

Record Anomaly

The value for L_QUANTITY of 4 is not between 5.000 and 20.000.

Shape Anomaly

In L_QUANTITY, 66.67% of 3 filtered records (2) are not between 5.000 and 20.000.