Checks Overview

Checks in Qualytics are rules applied to data that ensure quality by validating accuracy, consistency, and integrity. Each check includes a data quality rule, along with filters, tags, tolerances, and notifications, allowing efficient management of data across tables and fields.
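
As a rough mental model, a check can be pictured as a record that bundles the rule with its supporting settings. The Python sketch below is illustrative only: the class name `Check`, its attribute names, and the sample values are assumptions made for this example and do not reflect the actual Qualytics schema or API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Check:
    """Hypothetical stand-in for a check; not the real Qualytics data model."""
    rule_type: str                      # a rule type name, e.g. "Not Null"
    fields: list[str]                   # fields the rule is applied to
    filter: Optional[str] = None        # optional filter limiting which rows are validated
    tags: list[str] = field(default_factory=list)
    tolerance: float = 0.0              # assumed meaning: allowed fraction of failing rows
    notify: list[str] = field(default_factory=list)  # assumed: notification targets


# Illustrative instance with made-up field and tag names.
example = Check(rule_type="Not Null", fields=["customer_id"], tags=["critical"])
```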

Let’s get started 🚀

Check Types

In Qualytics, you will come across two types of checks:

Inferred Checks

Qualytics automatically generates inferred checks during a Profile operation. These checks typically cover 80-90% of the rules needed by users. They are created and maintained through profiling, which involves statistical analysis and machine learning methods.

For more details on Inferred Checks, please refer to the Inferred Check documentation.

Authored Checks

Authored checks are manually created by users within the Qualytics platform or API. You can author many types of checks, ranging from simple templates for common checks to complex rules using Spark SQL and User-Defined Functions (UDF) in Scala.

For more details on Authored Checks, please refer to the Authored Checks documentation.

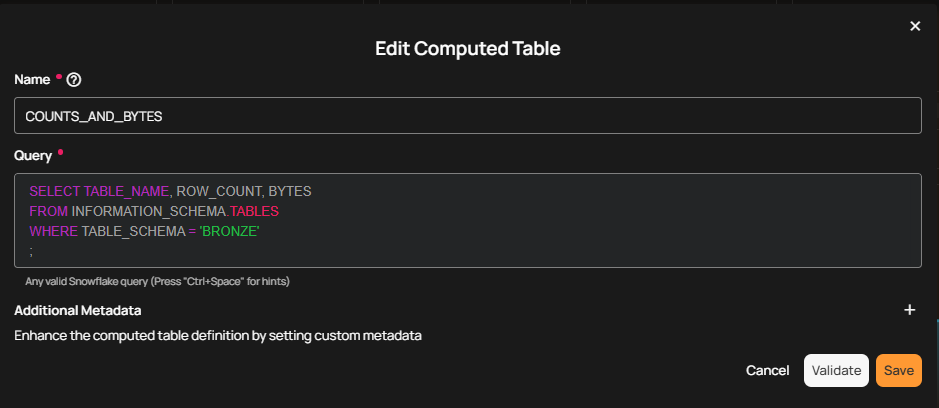

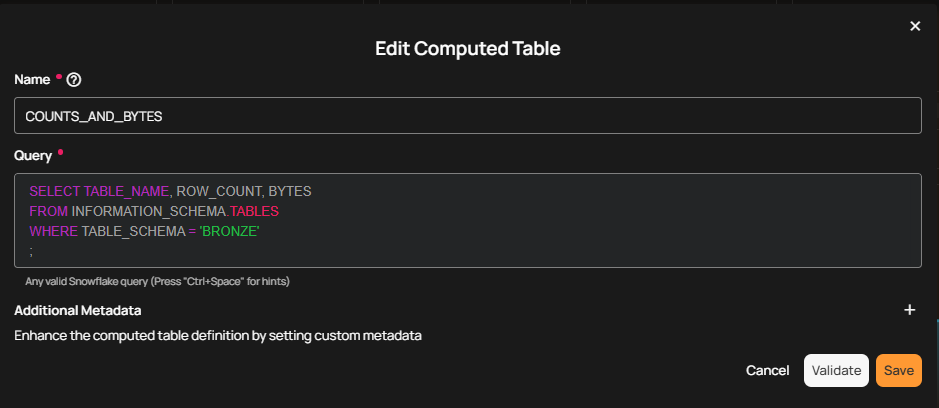

Editing Computed Assets from Checks

Qualytics allows users to edit computed tables and computed fields directly from check interfaces. This feature helps users quickly update computed logic while working on data quality checks—without leaving the check context.

What You Can Do

Edit Computed Assets from a Check

From a Check Details screen, users can:

- Edit the computed table used by the check

- Edit the computed field used by the check

The edit action opens the same Edit Computed dialog available from the container page, but directly within the check context. This allows users to quickly adjust logic when a check fails or needs refinement.

Keyboard Shortcut

E — Edit Computed Asset

When focused on a computed asset within a check:

- Press E to open Edit Computed

- This opens the Edit Computed Table or Edit Computed Field dialog

Note

On this screen, the “E” keyboard shortcut is used to open Edit Computed.

Dependency Protection

Qualytics automatically protects dependencies when computed assets are edited.

When a Dependency Is Affected

If an edit to a computed table or field would impact an existing check—for example:

- Renaming or removing a field used by the check

- Modifying a query that drops a referenced column

Qualytics will display a warning message before the change is applied.

The warning clearly lists:

- Fields that will be removed

- Checks and anomalies that depend on those fields

Proceeding with the Change

If you choose Proceed Anyway:

- The affected check(s) will be deleted

- Related anomalies will also be removed

- Qualytics will automatically redirect you to the most appropriate page (for example, the container page)

This behavior ensures system consistency and prevents broken references.
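
The dependency check described above can be pictured as a set comparison between the fields a computed asset exposes before and after an edit. The following Python sketch is a simplified illustration under that assumption; `CheckRef`, `affected_checks`, and the sample field names are hypothetical and are not part of Qualytics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckRef:
    """Hypothetical reference to a check and the fields it reads."""
    name: str
    fields: frozenset


def affected_checks(old_fields, new_fields, checks):
    """Return (removed fields, checks that depend on at least one of them).

    A renamed field shows up here as a removal of the old name.
    """
    removed = set(old_fields) - set(new_fields)
    impacted = [c for c in checks if c.fields & removed]
    return removed, impacted


checks = [
    CheckRef("order_total_not_negative", frozenset({"order_total"})),
    CheckRef("customer_id_not_null", frozenset({"customer_id"})),
]

# The edited query no longer returns "order_total".
removed, impacted = affected_checks(
    old_fields=["customer_id", "order_total", "region"],
    new_fields=["customer_id", "region"],
    checks=checks,
)
```

In this example only the check that reads `order_total` is flagged; the check on `customer_id` is unaffected because that field survives the edit.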

Important Considerations

- There are no strict limitations on editing computed assets

- Changes may impact dependent checks

- Always review dependency warnings before proceeding

- To preserve an existing check, avoid removing or renaming fields it depends on

View & Manage Checks

The Checks tab in Qualytics provides users with an interface to view and manage the checks associated with their data. These checks are accessible through two different methods, as discussed below.

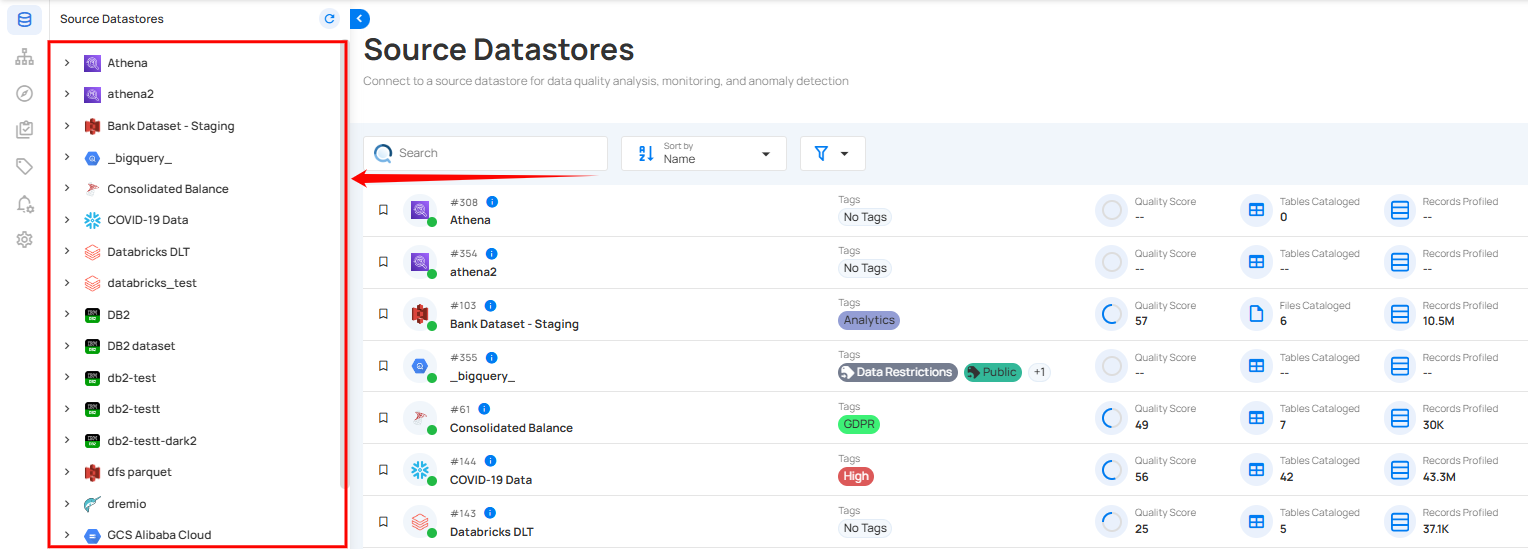

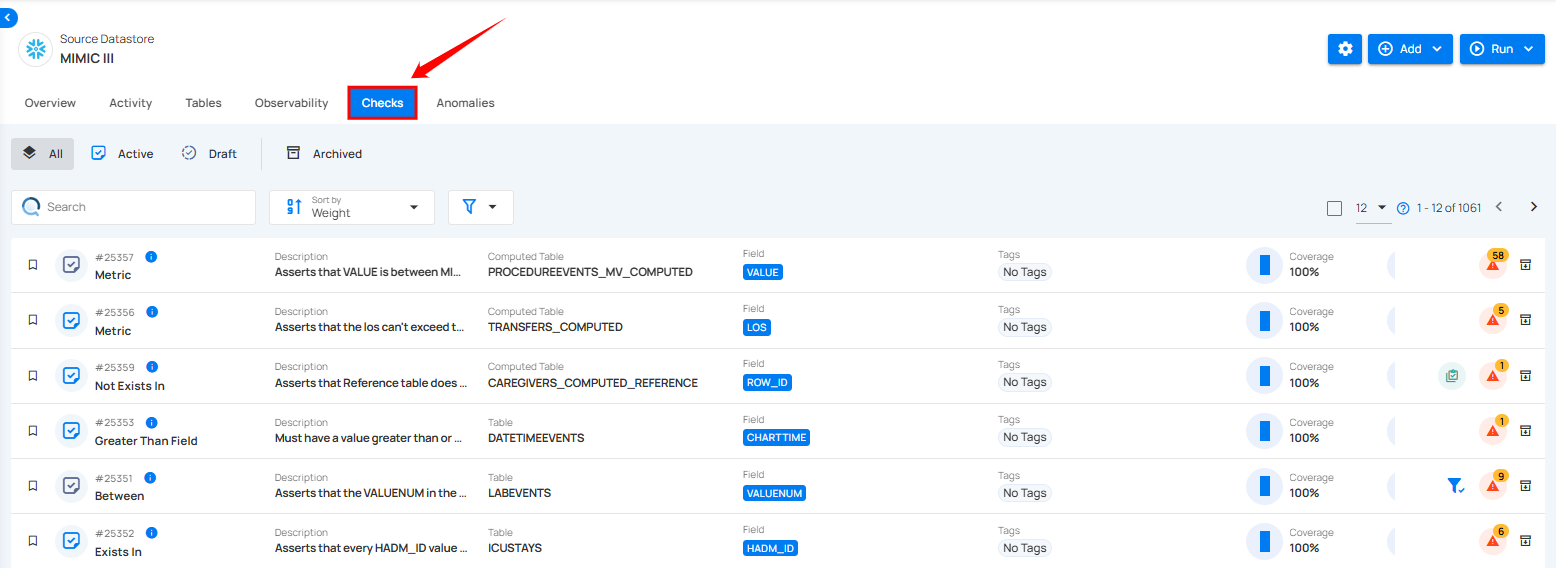

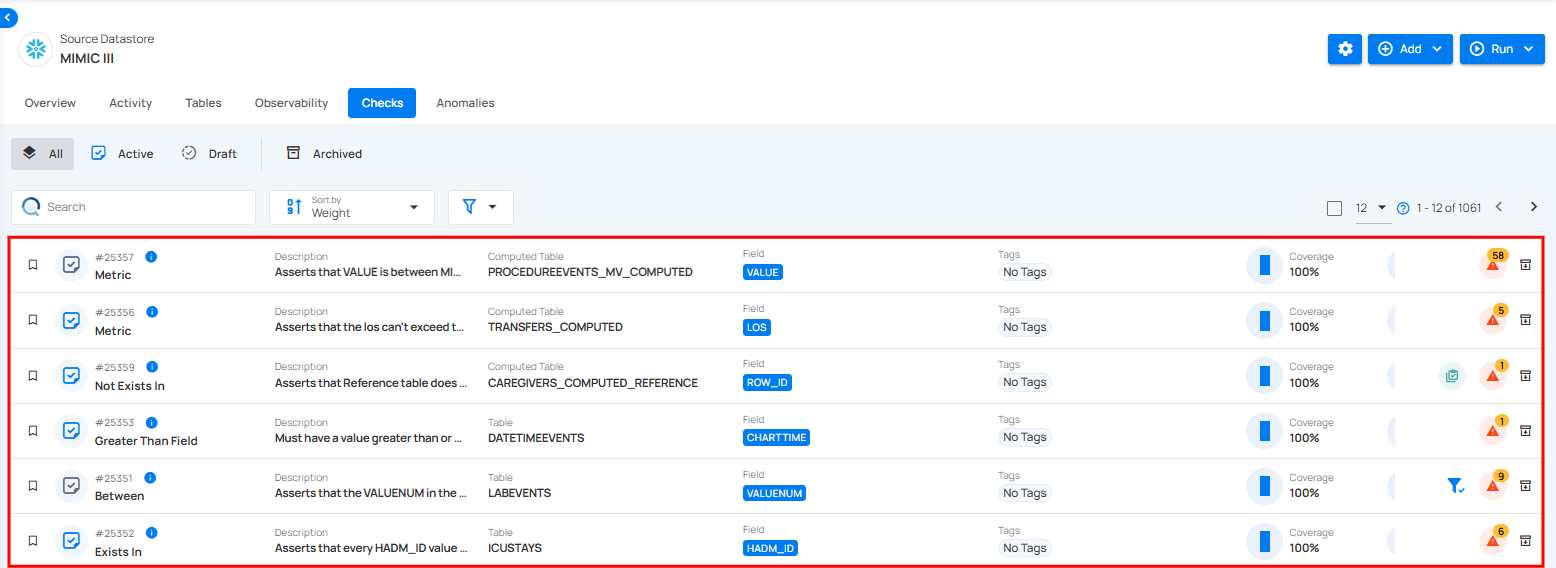

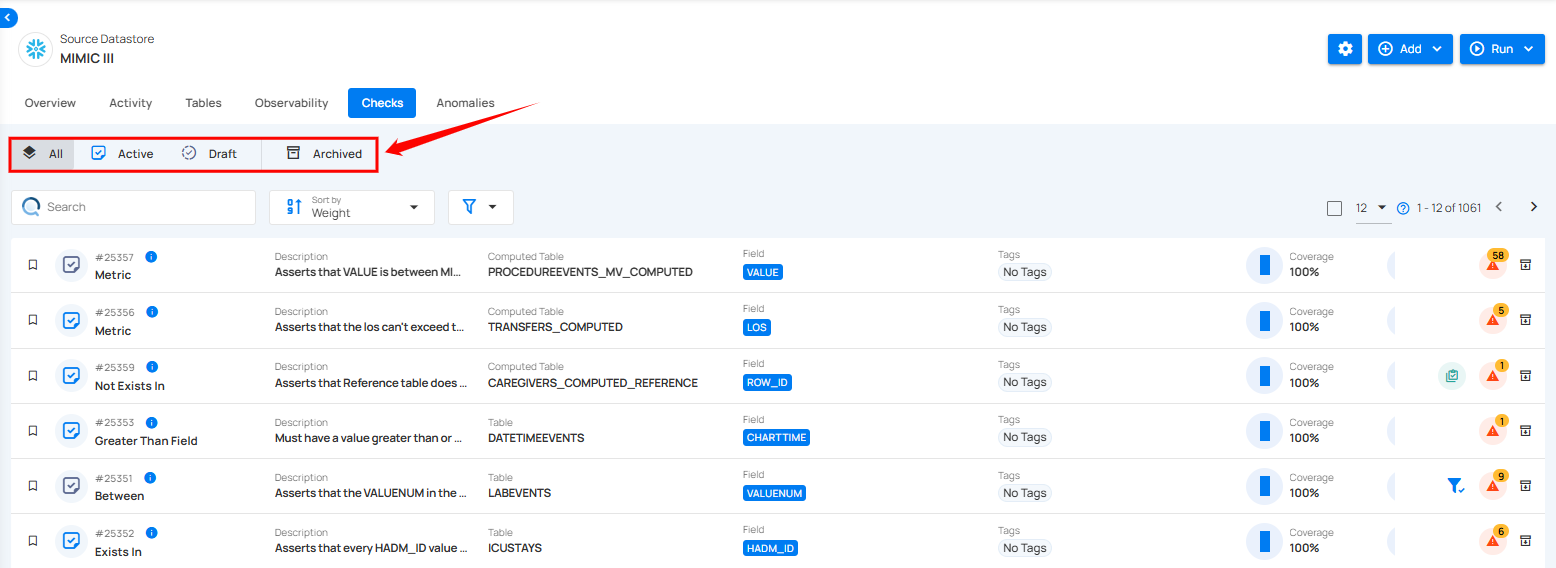

Method 1: Datastore-Specific Checks

Step 1: Log in to your Qualytics account and select the datastore from the left menu.

Step 2: Click "Checks" in the navigation tab.

You will see a list of all the checks that have been applied to the selected datastore.

You can switch between different types of checks to view them categorically (such as All, Active, Draft, and Archived).

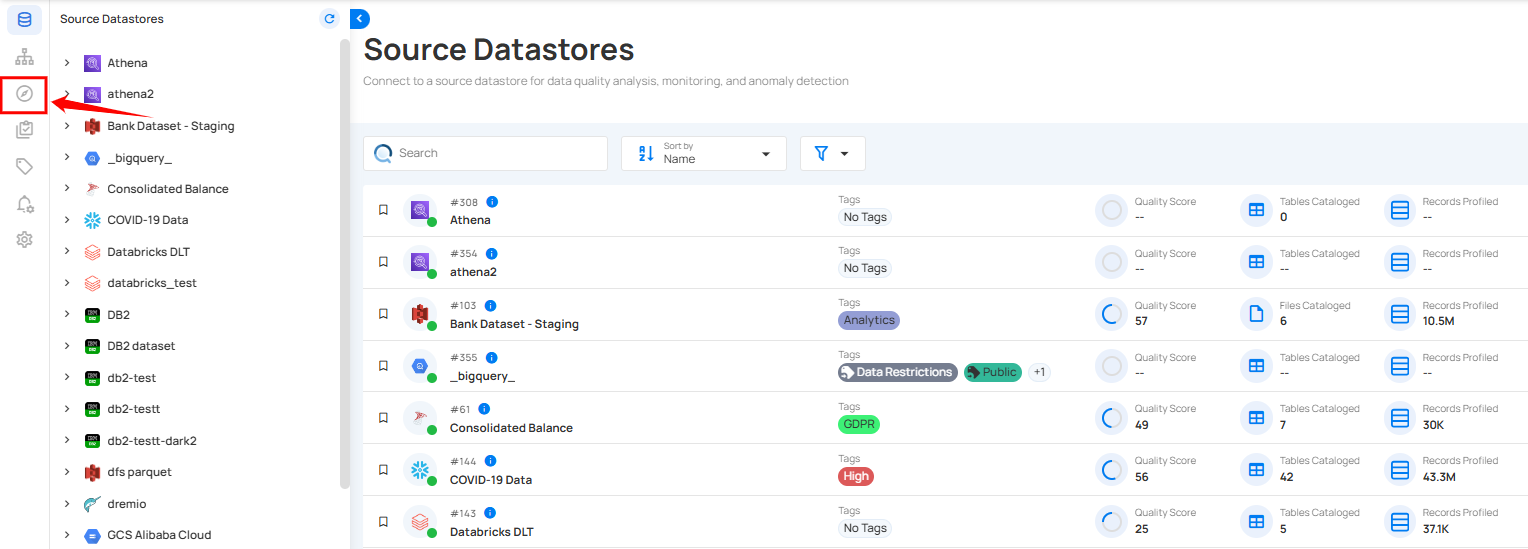

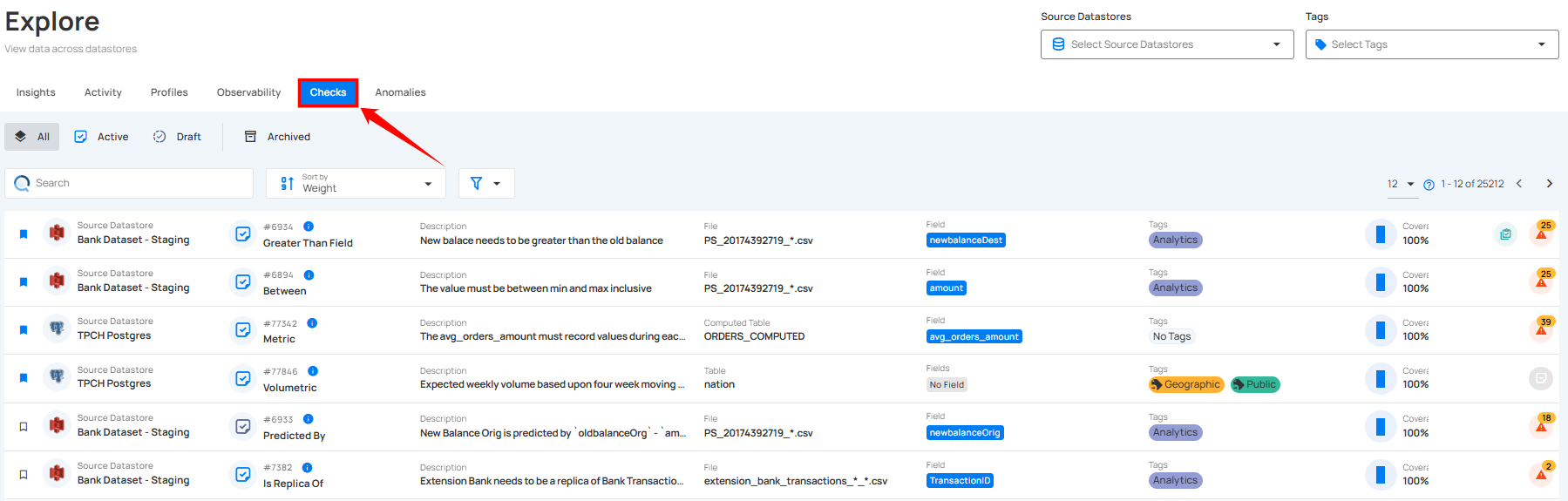

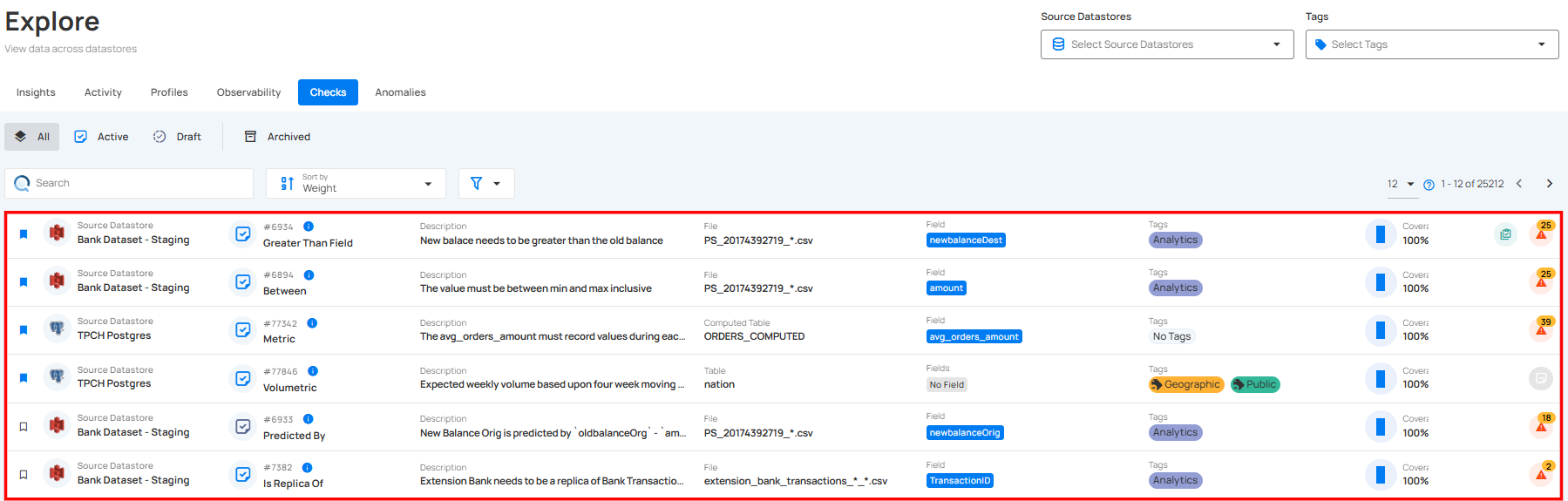

Method 2: Explore Section

Step 1: Log in to your Qualytics account and click the Explore button on the left side panel of the interface.

Step 2: Click "Checks" in the navigation tab.

You'll see a list of all the checks that have been applied to various tables and fields across different source datastores.

Check Templates

Check Templates empower users to efficiently create, manage, and apply standardized checks across various datastores, acting as blueprints that ensure consistency and data integrity across different datasets and processes.

Check templates streamline the validation process by enabling check management independently of specific data assets such as datastores, containers, or fields. These templates reduce manual intervention, minimize errors, and provide a reusable framework that can be applied across multiple datasets, ensuring all relevant data adheres to defined criteria. This not only saves time but also enhances the reliability of data quality checks within an organization.
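
To illustrate the blueprint idea, the sketch below defines a rule once, with no data asset attached, and then stamps out one check definition per target. It is a conceptual Python example only: `CheckTemplate`, `apply_template`, the `properties` dictionary, and the datastore, container, and field names are all assumptions, not the Qualytics implementation.

```python
from dataclasses import dataclass


@dataclass
class CheckTemplate:
    """Hypothetical template: a rule definition with no data asset attached."""
    rule_type: str
    properties: dict


def apply_template(template, targets):
    """Create one check definition per (datastore, container, field) target."""
    return [
        {
            "rule_type": template.rule_type,
            "properties": dict(template.properties),  # copy so checks do not share state
            "datastore": datastore,
            "container": container,
            "field": field_name,
        }
        for datastore, container, field_name in targets
    ]


template = CheckTemplate(rule_type="Max Length", properties={"max": 50})
checks = apply_template(
    template,
    [
        ("sales_db", "customers", "email"),
        ("crm_db", "contacts", "email_address"),
    ],
)
```

Both resulting definitions share the same rule and settings but point at different fields, which is the consistency benefit the template approach is meant to provide.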

For more details about check templates, please refer to the Check Templates documentation.

Apply Check Template for Quality Checks

You can apply check templates to make quality checks easier and more consistent. Using a set template lets you quickly verify that your data meets specific standards, reducing mistakes and improving data quality. Applying these templates makes finding and fixing errors more efficient and ensures your quality checks are applied across different projects or systems without starting from scratch.

For more details on how to apply check templates for quality checks, please refer to the Apply Check Template for Quality Checks documentation.

Export Check Templates

You can export check templates to easily share or reuse your quality check settings across different systems or projects. This saves time by eliminating the need to recreate the same checks repeatedly and ensures that your quality standards are consistently applied. Exporting templates helps maintain accuracy and efficiency in managing data quality across various environments.

For more details about export check templates, please refer to the Export Check Templates documentation.

Manage Checks in Datastore

Managing your checks within a datastore is important to maintain data integrity and ensure quality. You can categorize, create, update, archive, restore, delete, and clone checks, making it easier to apply validation rules across datastores. Checks can be set as active, draft, or archived based on their current state of use. You can also define reusable templates for quality checks to streamline the creation of multiple checks with similar criteria. Checks can additionally be marked as important or favorite, giving users flexibility to manage data quality efficiently.

For more details on how to manage checks in datastores, please refer to the Manage Checks in Datastore documentation.

Check Rule Types

In Qualytics, a variety of check rule types are provided to maintain data quality and integrity. These rules define specific criteria that data must meet, and checks apply these rules during the validation process.

For more details about check rule types, please refer to the Rule Types Overview documentation.

| Rule Type | Description |
|---|---|
| After Date Time | Asserts that the field is a timestamp later than a specific date and time. |
| Aggregation Comparison | Verifies that the specified comparison operator evaluates true when applied to two aggregation expressions. |
| Any Not Null | Asserts that one of the fields must not be null. |
| Before Date Time | Asserts that the field is a timestamp earlier than a specific date and time. |
| Between | Asserts that values are equal to or between two numbers. |
| Between Times | Asserts that values are equal to or between two dates or times. |
| Contains Credit Card | Asserts that the values contain a credit card number. |
| Contains Email | Asserts that the values contain email addresses. |
| Contains Social Security Number | Asserts that the values contain social security numbers. |
| Contains Url | Asserts that the values contain valid URLs. |
| Data Diff | Asserts that the dataset created by the targeted field(s) has differences compared to the referred field(s). |
| Distinct Count | Asserts on the approximate count distinct of the given column. |
| Entity Resolution | Asserts that every distinct entity is appropriately represented once and only once. |
| Equal To | Asserts that all of the selected fields equal a value. |
| Equal To Field | Asserts that this field is equal to another field. |
| Exists In | Asserts that the rows of a compared table/field of a specific datastore exist in the selected table/field. |
| Expected Schema | Asserts that all selected fields are present and that all declared data types match expectations. |
| Expected Values | Asserts that values are contained within a list of expected values. |
| Field Count | Asserts that there must be exactly a specified number of fields. |
| Freshness Check | Asserts that data was added or updated in the data asset after a declared time. |
| Greater Than | Asserts that the field is a number greater than (or equal to) a value. |
| Greater Than Field | Asserts that this field is greater than another field. |
| Is Address | Asserts that the values contain the specified required elements of an address. |
| Is Credit Card | Asserts that the values are credit card numbers. |
| Is Replica Of (is sunsetting) | Asserts that the dataset created by the targeted field(s) is replicated by the referred field(s). |
| Is Type | Asserts that the data is of a specific type. |
| Less Than | Asserts that the field is a number less than (or equal to) a value. |
| Less Than Field | Asserts that this field is less than another field. |
| Matches Pattern | Asserts that a field must match a pattern. |
| Max Length | Asserts that a string has a maximum length. |
| Max Partition Size | Asserts the maximum number of records that should be loaded from each file or table partition. |
| Max Value | Asserts that a field has a maximum value. |
| Metric | Records the value of the selected field during each scan operation and asserts that the value is within a specified range (inclusive). |
| Min Length | Asserts that a string has a minimum length. |
| Min Partition Size | Asserts the minimum number of records that should be loaded from each file or table partition. |
| Min Value | Asserts that a field has a minimum value. |
| Not Exists In | Asserts that values assigned to this field do not exist as values in another field. |
| Not Future | Asserts that the field's value is not in the future. |
| Not Negative | Asserts that this is a non-negative number. |
| Not Null | Asserts that the field's value is not explicitly set to nothing. |
| Positive | Asserts that this is a positive number. |
| Predicted By | Asserts that the actual value of a field falls within an expected predicted range. |
| Required Values | Asserts that all of the defined values must be present at least once within a field. |
| Satisfies Expression | Evaluates the given expression (any valid Spark SQL) for each record. |
| Sum | Asserts that the sum of a field is a specific amount. |
| Time Distribution Size | Asserts that the count of records for each interval of a timestamp is between two numbers. |
| Unique | Asserts that the field's value is unique. |
| Volumetric | Asserts that the data volume (rows or bytes) remains within dynamically inferred thresholds based on historical trends (daily, weekly, monthly). |
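
To make a few of the descriptions above concrete, the sketch below expresses some of the simpler rule types as plain Python predicates. It only mirrors the wording in the table (for example, that Between is inclusive); the function names are hypothetical, null handling is not modeled, and the code says nothing about how Qualytics evaluates these rules internally.

```python
def between(value, low, high):
    """Between: the value is equal to or between the two bounds (inclusive)."""
    return low <= value <= high


def not_negative(value):
    """Not Negative: zero passes, anything below zero fails."""
    return value >= 0


def positive(value):
    """Positive: strictly greater than zero, so zero fails."""
    return value > 0


def unexpected_values(values, allowed):
    """Expected Values: return the values that fall outside the allowed list."""
    allowed_set = set(allowed)
    return [v for v in values if v not in allowed_set]


def missing_required_values(values, required):
    """Required Values: return the required values that never appear in the field."""
    return set(required) - set(values)


def is_unique(values):
    """Unique: no value occurs more than once."""
    return len(values) == len(set(values))


# Made-up sample column used only for illustration.
status_column = ["open", "closed", "open", "pending"]
```

With this sample column, `unexpected_values(status_column, ["open", "closed"])` flags the `pending` row, while `missing_required_values(status_column, ["open", "cancelled"])` reports that `cancelled` never occurs. The first is a per-record assertion and the second is an assertion about the field as a whole.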